July 2006


AutomatedBuildings.com


Daniel Amitai

The main objective of an engineer troubleshooting a power quality event is to identify the source of the disturbance in order to determine the required corrective action. To identify the source, the engineer depends on recorded data captured by monitoring equipment.


Management demands a cost-effective method to solve the problem as quickly as possible. The electrical engineer speaks of installing instrumentation, collecting data, analyzing data, re-installing and re-analyzing. It is not uncommon for months to pass before the problem is isolated and a solution is implemented.

Power quality analysis has traditionally posed a unique challenge to the engineer, demanding an accurate assumption as to the dimensions of the disturbance in order to capture the event to memory for examination. The correct balance between memory size and the deviation of the disturbance from the norm is often elusive. Thresholds set too low capture too many events of little or no consequence, filling the memory before the sought-after damaging event occurs. Thresholds set too high can miss the event altogether.
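The threshold dilemma can be illustrated with a minimal sketch. The recorder model, the function name `triggered_capture` and all numeric values below are hypothetical and chosen only for illustration; they do not describe any particular instrument.

```python
def triggered_capture(deviations, threshold, memory_slots):
    """Return the indices of events stored by a threshold-triggered recorder.

    An event is stored when its deviation reaches the threshold, but only
    while the finite memory still has free slots; later events are lost.
    """
    stored = []
    for index, deviation in enumerate(deviations):
        if deviation >= threshold and len(stored) < memory_slots:
            stored.append(index)
    return stored


# Hypothetical record: 1000 events with small deviations (0 to 4 percent of
# nominal) and one damaging 30 percent deviation late in the recording.
deviations = [float(i % 5) for i in range(1000)]
damaging_index = 900
deviations[damaging_index] = 30.0

for threshold in (2.0, 10.0, 40.0):
    stored = triggered_capture(deviations, threshold, memory_slots=100)
    print(f"threshold {threshold:>4}: stored {len(stored):>3} events, "
          f"damaging event captured: {damaging_index in stored}")
```

In this toy record the lowest threshold fills all 100 slots with minor events long before the damaging one arrives, the highest threshold never triggers, and only the middle setting captures the event, which is exactly the guess the engineer has to get right in advance.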

What is Data Compression Technology?

Revolutionary data compression technology takes the guess-work out of isolating the source of power quality problems by eliminating the need for devising set points and calculating threshold values.

The ability to capture all the waveform data in high resolution and in its entirety over an extended period of time is the only way to ensure that the event will be recorded, allowing the engineer to analyze the data and define a solution.

Until now, monitoring and analyzing system electrical trends have presented a true challenge because certain data compromises were required to counteract capacity, processing and physical limitations. Data compression technology provides unlimited capacity for power quality data storage. This means that you are no longer required to set constraints on system data, rendering the risk in data selection based on set thresholds and triggers obsolete.

Operators of electrical networks are constantly faced with power events and transient occurrences that affect power quality and heighten energy costs.

In the past, to determine whether such events reflect system trends or isolated incidents, electrical engineers relied on partial information indicating what events occurred and when; not all events were recorded due to data capacity limitations and missed thresholds. Now, by analyzing multi-point, time-synchronized real-time power quality data, you can actually reveal why all power events occur and what causes them.

In short, data compression technology pushes power quality analysis capabilities into the next generation.

Why Consider Data Compression Technology?

Data compression technology allows for both immediate power quality problem solving and true proactive energy management. The ability to analyze the complete data set at any time enables energy managers to call up and analyze historic time-based energy consumption trends in order to make supply-side decisions.

Data compression technology allows control over both the consumption and quality of the supplied energy.

Optimal system functionality in diverse network topologies depends on the ability of energy suppliers, service providers and industrial and commercial consumers of energy to provide power quality over time and to successfully analyze, predict and prevent energy events using multi-point, historic and real-time logged data.

Achieving Benefits

The US Department of Energy estimates that about $80 billion a year is lost to power quality issues. To reduce these losses, operators must identify the source of each power event and prevent its recurrence (for example, the utility may be identified as the problem source, or, if the failure occurs within the facility, the cause must be determined there).

Problem sources are many and often reflect the need for predictive and preventative maintenance measures. Utility operators face problem sources such as capacity, weather conditions and equipment failures. Consumers suffer from equipment failures, faulty installations and incompatible equipment usage creating destructive resonant situations.

When effective monitoring is installed, power providers can work to avoid the negative impacts of diminished quality and service capabilities, which cause damage in the following areas:

In industrial sectors:

Downtime

Product quality

Maintenance costs

Hidden costs (reputation, recall)

In commercial and service sectors:

Service stoppage

Service quality

Maintenance costs

Hidden costs (reputation, low customer satisfaction level)

Once a power quality event is fully characterized by accessing compressed power quality data, a solution can be implemented successfully.

Analysis Resources and Capabilities

Implementing data compression technology in your electrical installation means:

Everything you want to see is stored; there are no more data compromises to counter recording resolution and capacity issues

Years of data, covering every network cycle, are available with no data gaps

Thresholds and triggers are no longer needed; missing events becomes a thing of the past

All data parameters are recorded; there is no need to select measurement parameters

Comprehensive power quality reporting and statistics for data analysis and report generation are accessible and organized

Multi-point time-synchronized recording provides a true snapshot for any period in the entire network

Tracing the Evolution of Power Quality Analysis Technologies

Over the years, various technologies have evolved for monitoring and logging power quality data. Throughout this period, developers have faced the same challenges regarding power quality, data capacity and system trends. Ultimately, the analysis of sampled data serves to manage, maintain and optimize system operations and costs.

Four Technological Generations

It is possible to delineate four distinct generations in the development of power quality technology:

1st Generation, Power Meter/Monitor: the first-generation technologies provide display capabilities only. Whether analog or digital, the displayed information is used solely for monitoring the system.

2nd Generation, Data Logger: the second-generation technologies use periodic logging mechanisms and present data in paper or paperless form. Still, the information is utilized for system monitoring only.

3rd Generation, Event Recorder/Power Quality Analyzer: the third-generation technologies require the setting of thresholds and triggers, which are always difficult to assess correctly given that memory capacity is finite and quickly filled. When values are set too low the capacity is filled instantly; when values are set too high very few events are recorded.

4th Generation, Power Quality Data Center: the fourth-generation technology provides limitless, continuous logging and storage of power quality data using data compression technology. Setting parameter values, thresholds, triggers and other constraints on data is no longer required.

Additionally, the troubleshooter can determine why power quality events occur over the entire electrical network and then successfully identify what causes them, regardless of their cycle occurrence. This measurement and analysis technology enables the engineer to optimize electrical network efficiency and cut power quality losses by relying on the analysis of ungapped data.

Exploring Data Analysis Advantages

Analysis is greatly enhanced by multi-point time synchronization, which lets troubleshooters trace energy flows over the network during power events to determine event causes. It is equally important to log network energy flows when no events are occurring. To analyze an event correctly, logging is also necessary at all other points while the event occurs at a specific point.

During power quality events, impedances change. Using 4th-generation technology, it is possible to calculate impedances and perform accurate network simulations for comprehensive analysis.
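As an illustration of such an impedance calculation, the sketch below estimates the source impedance seen from one monitoring point using two recorded operating states. It assumes a simple Thevenin model (a constant source voltage behind a single series impedance), which is an assumption made here and not a method described in this article; the function name and all phasor values are hypothetical.

```python
import cmath


def source_impedance(v_before, i_before, v_after, i_after):
    """Estimate the upstream (source) impedance from two phasor snapshots.

    Assumes a Thevenin model, V = E - Z * I, with the source voltage E
    unchanged between the two snapshots, so Z = (V1 - V2) / (I2 - I1).
    """
    delta_i = i_after - i_before
    if abs(delta_i) < 1e-9:
        raise ValueError("current did not change; impedance is undetermined")
    return (v_before - v_after) / delta_i


# Hypothetical example: build two snapshots from a known impedance and
# check that the estimate recovers it.
source_voltage = 230 + 0j            # volts, assumed constant
true_impedance = 0.05 + 0.15j        # ohms, assumed for the example
i1 = cmath.rect(100, -0.3)           # amperes before the event
i2 = cmath.rect(400, -0.5)           # amperes during the event
v1 = source_voltage - true_impedance * i1
v2 = source_voltage - true_impedance * i2

estimate = source_impedance(v1, i1, v2, i2)
print(f"estimated source impedance: {estimate:.3f} ohm")
```

The estimate is only as good as the model: it needs voltage and current from before and during the event, which is why continuous recording without gaps matters for this kind of analysis.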

The Multipoint Advantage: Example

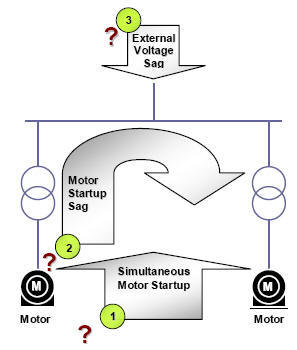

Example of Multipoint Analysis Advantage

Question: What was the source of the voltage sag?

Answer: The source could be any one, or a combination, of the illustrated events. Other factors could also be involved. Monitoring multiple sites simultaneously and continuously allows the engineer to see the whole picture, all the time. Power quality events can be examined at the time of the event and in the context of the timeline before and after it, and the impedances at the site can be compared at different times.
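One way to picture how time-synchronized records from several points narrow down the source of a sag is the sketch below. It applies a common rule of thumb, namely that current rising through a meter while the voltage sags suggests a disturbance downstream of that meter. This rule is an assumption introduced for illustration and is not taken from the article; it can be misleading in real networks. The site names, readings and the function `locate_sag` are all hypothetical.

```python
def locate_sag(sites, nominal_voltage, sag_fraction=0.9):
    """Classify each monitoring point relative to a voltage sag.

    sites maps a site name to a list of (rms_voltage, rms_current) tuples,
    one per network cycle, all recorded against the same synchronized clock.
    """
    limit = sag_fraction * nominal_voltage
    report = {}
    for name, cycles in sites.items():
        # First cycle in which this site's voltage drops below the limit.
        sag_cycle = next(
            (n for n, (volts, _) in enumerate(cycles) if volts < limit), None
        )
        if sag_cycle is None:
            report[name] = "no sag seen"
        elif sag_cycle == 0:
            report[name] = "no pre-sag data"
        elif cycles[sag_cycle][1] > cycles[sag_cycle - 1][1]:
            # Rule of thumb (assumption): current rose as the voltage fell.
            report[name] = "disturbance downstream of meter"
        else:
            report[name] = "disturbance upstream of meter"
    return report


# Hypothetical per-cycle readings (volts, amperes) at three meters.
sites = {
    "main_incomer": [(230, 400), (229, 405), (205, 900), (206, 880)],
    "feeder_a": [(229, 150), (228, 152), (203, 640), (204, 630)],
    "feeder_b": [(229, 120), (228, 121), (204, 107), (205, 108)],
}
report = locate_sag(sites, nominal_voltage=230)
for name, verdict in report.items():
    print(f"{name}: {verdict}")
```

In this made-up data the incomer and feeder A both see the current surge while feeder B sees it fall, which points toward feeder A. A single meter at any one of these points could not have made that distinction.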

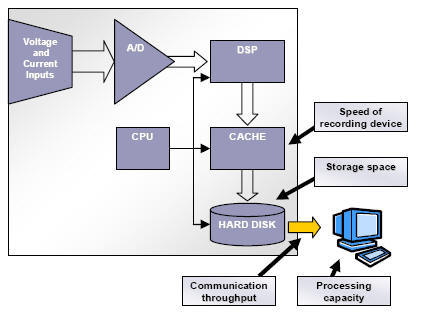

Data Bottlenecks without Data Compression


Question: What causes data bottlenecks when logging data with non-compression technologies?

Answer: Data bottlenecks with non-compression technologies are caused by limitations in recording speed capabilities, storage space, communication throughput and computer processing capacity.

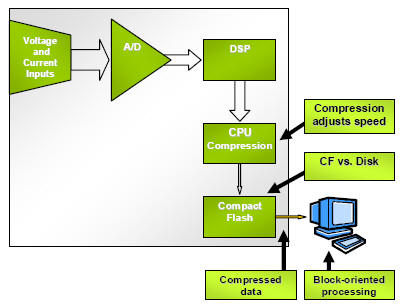

Eliminating Data Bottlenecks with Data Compression Technology


Question: How are data bottlenecks eliminated by using data compression technology?

Answer: Data compression technology eliminates the bottlenecks in the logging process: the CPU compresses the data; CompactFlash storage is used instead of a hard disk; the compressed data is small enough that capacity is no longer a factor; and block-oriented processing is implemented.
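To give a feel for why block-oriented compression suits power waveforms, the sketch below compresses a block of cycles by storing each cycle as its difference from the previous one and then applying a general-purpose compressor. This is not the PQZip algorithm, whose details are not given here; it is a generic lossless illustration using Python's standard `zlib` module, and the sampling figures are assumed.

```python
import math
import struct
import zlib

SAMPLES_PER_CYCLE = 256  # assumed sampling resolution, for illustration only


def compress_block(cycles):
    """Losslessly compress a block of cycles (lists of integer samples)."""
    previous = [0] * SAMPLES_PER_CYCLE
    deltas = []
    for cycle in cycles:
        # A steady waveform repeats, so cycle-to-cycle differences are ~0.
        deltas.extend(s - p for s, p in zip(cycle, previous))
        previous = cycle
    raw = struct.pack(f"<{len(deltas)}i", *deltas)
    return zlib.compress(raw, 9)


def decompress_block(blob):
    """Rebuild the original cycles from a compressed block."""
    raw = zlib.decompress(blob)
    deltas = struct.unpack(f"<{len(raw) // 4}i", raw)
    cycles = []
    previous = [0] * SAMPLES_PER_CYCLE
    for start in range(0, len(deltas), SAMPLES_PER_CYCLE):
        chunk = deltas[start:start + SAMPLES_PER_CYCLE]
        cycle = [p + d for p, d in zip(previous, chunk)]
        cycles.append(cycle)
        previous = cycle
    return cycles


# One block: 50 identical cycles of a clean sine wave (1 second at 50 Hz).
one_cycle = [round(10000 * math.sin(2 * math.pi * n / SAMPLES_PER_CYCLE))
             for n in range(SAMPLES_PER_CYCLE)]
block = [list(one_cycle) for _ in range(50)]

blob = compress_block(block)
raw_size = len(block) * SAMPLES_PER_CYCLE * 2  # bytes if stored as 16-bit
print(f"raw {raw_size} bytes, compressed {len(blob)} bytes")
```

Steady-state cycles shrink to almost nothing while a disturbed cycle keeps its full detail, so the achievable ratio depends on how quiet the network is. The ratios of a purpose-built algorithm will differ from those of this generic sketch.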

Implementing Data Compression Technology

Patent-pending PQZip data compression technology is employed by the Elspec G4400 Power Quality Data Center and implements:

Compression algorithm with a typical 1000:1 compression ratio. This real-time compression, performed independently of the sampling, prevents data gaps.

Multi-point implementation of time-synchronized devices over the entire grid shows the interactivity of the values recorded at the different points in the network at that point in time.

Infinite continuous logging and storage of data for total network analysis
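The effect of the typical 1000:1 ratio quoted above can be put into rough numbers. Only the ratio comes from this article; the channel count, sampling resolution, network frequency and sample width in the sketch below are hypothetical values chosen for the arithmetic.

```python
def raw_bytes_per_day(channels, samples_per_cycle, frequency_hz,
                      bytes_per_sample):
    """Uncompressed waveform volume for one day of continuous recording."""
    bytes_per_second = (channels * samples_per_cycle
                        * frequency_hz * bytes_per_sample)
    return bytes_per_second * 86_400  # seconds in a day


# Hypothetical recorder: 8 channels, 512 samples per cycle, 50 Hz network,
# 16-bit samples. Only the 1000:1 ratio is taken from the text.
COMPRESSION_RATIO = 1000

per_day = raw_bytes_per_day(channels=8, samples_per_cycle=512,
                            frequency_hz=50, bytes_per_sample=2)
per_year = per_day * 365
compressed_per_year = per_year / COMPRESSION_RATIO

print(f"raw per day:         {per_day / 1e9:.1f} GB")
print(f"raw per year:        {per_year / 1e12:.1f} TB")
print(f"compressed per year: {compressed_per_year / 1e9:.1f} GB")
```

Under these assumptions the raw stream is about 35 GB per day, or roughly 13 TB per year, while the compressed stream is about 13 GB per year. That difference is what makes multi-year continuous logging practical on modest storage.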

Full implementation of the new technology with the Elspec system provides all standards and options discussed in this white paper, including historic and real-time data display, logging and storage capabilities, and generation of reports and graphical formats for analysis of compressed and stored power quality data.

Summarizing Benefits

Of the four generations of technological evolution for storing power quality data for analysis, only 4th-generation data compression technology affords the unprecedented advantage of infinite, continuous logging and storage of high-resolution data. Using this new technology avoids capacity issues, so the resulting data is entirely uncompromised. This represents a clear advantage when analyzing system power trends and events. The natural and desired outcome of in-depth system analysis is prediction and prevention of power events, reduced power costs and the constant supply of enhanced power quality.

About Elspec ®

Elspec develops, produces and markets comprehensive electrical power quality solutions and sophisticated electrical network analysis technologies. Implemented applications spanning the industrial, commercial and utility sectors enhance electrical network quality and increase energy savings using advanced network analysis tools.

The Elspec product family features: Equalizer real-time power quality enhancement system for optimal power quality; Activar power factor correction unit for unlimited transient-free operations; Elspec G4000 Power Quality Data Center for optimal power quality assurance using patent-pending PQZip compression technology for selection and endless storage of logged measurement data; PPQ-306 portable power quality analyzer for in-depth site analysis; PQSCADA measurement and analysis software for evaluating complex data in graphical format; Iron Core Reactors for harmonics filtration; MKP Capacitors with low-losses for reactive energy compensation.
