October 2009


AutomatedBuildings.com


Bob Cutting, Vice President, Martin Verge-Ostiguy,

We are witnessing a global surge in the use of technologically advanced, energy-efficient practices in new building construction. Advanced building automation aggregates data from a variety of sensors to provide more intelligent control of building security, safety and energy consumption. This worldwide push toward greener building operations will be advanced by ongoing innovation and by wider adoption of a variety of advanced sensor technologies.


Our society’s commitment to reducing wasted energy across the vast square footage of office space demands that we not become complacent. We must keep looking for newer, more efficient solutions in the years to come, as technical innovation continues to shrink buildings’ carbon footprints. Lighting, for example, accounts for a large portion of the average building’s energy consumption (up to 40%), so it represents a significant opportunity for energy reduction. Yet we rarely think twice about relying on standard motion detectors for automated lighting control. PIR (passive infrared) and ultrasonic technologies have improved the detection capabilities of these standard sensors. Even so, a level of inefficiency remains, and it is now being addressed.

Today’s Sensors

Common industrial indoor motion sensors are simple binary switches that indicate either motion or no motion. These sensors have long suffered from a few basic and unavoidable operational flaws. Environmental conditions generate spurious detections, for example movement near but outside the monitored room, or changing sunlight on surfaces within it. Airflow changes from the cyclic operation of HVAC systems also cause numerous false detections, both from the moving air itself and from the blinds, plants and curtains it disturbs. All of these false detections repeatedly turn on lights, wasting electricity and shortening bulb life.

More significant are the everyday occasions when people actually do enter a room, but only briefly. Someone who left a notebook in the conference room walks in for 5 seconds and the lights turn on. The lights then stay on for another 10 minutes, depending on the time-delay setting. And what if the ambient light was already high enough for the person to find that notebook easily? Why turn the lights on at all?
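The timing of a conventional fixed-delay motion switch can be modeled in a few lines. This is a minimal sketch. It assumes that each reported motion event restarts the hold timer. The function name and the one-event-per-second sampling are illustrative and are not taken from any particular product.

```python
def lit_seconds(motion_times, delay_s):
    """Total seconds the lights stay on under a simple fixed-delay motion switch.

    Each motion event (re)starts a hold timer of delay_s seconds; the lights
    switch off only after delay_s seconds pass with no further motion.
    """
    total = 0.0
    on_start = None  # when the current lit interval began
    off_at = None    # when the current lit interval ends if no more motion
    for t in sorted(motion_times):
        if off_at is None or t > off_at:
            # Lights were off: bank the previous interval and start a new one.
            if on_start is not None:
                total += off_at - on_start
            on_start = t
        off_at = t + delay_s
    if on_start is not None:
        total += off_at - on_start
    return total


# The notebook visit: motion reported once per second for 5 seconds,
# followed by a 10-minute (600 s) time delay.
print(lit_seconds(range(6), delay_s=600))  # 605.0
```

Under this model, a 5-second visit keeps the room lit for just over 10 minutes. Nearly all of that time is the safety delay rather than actual occupancy.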

So why do we use motion sensors? First, they’re cheap. Second, they’re relatively simple to install. And it’s easy to argue that they significantly reduce energy consumption, since they accomplish the major task of making sure lights don’t stay on all day and night for no reason. But why stop there and ignore the inherent inefficiencies of these sensors?

Consider a 20-story building with 20 rooms per floor. Even 15 minutes of excess lighting per room per day adds up to as much as 5,000 kWh of wasted energy per year. So even the slightest improvement in occupancy detection accuracy is meaningful, especially when multiplied across a city block, a city, a state and beyond.
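The arithmetic behind this estimate can be sketched as follows. The article does not give a per-room lighting load, so `room_load_w` is an assumption. The building has 20 × 20 rooms, and 15 minutes is 0.25 h, so over 365 days that comes to 36,500 room-hours a year. Spreading 5,000 kWh over those hours implies roughly 137 W of lighting per room. Counting only working days (about 250) would shrink the result proportionally.

```python
def excess_kwh_per_year(floors, rooms_per_floor, excess_min_per_day,
                        room_load_w, days_per_year=365):
    """Annual energy wasted by lights left on longer than needed (sketch)."""
    room_hours = floors * rooms_per_floor * (excess_min_per_day / 60) * days_per_year
    return room_hours * room_load_w / 1000  # watt-hours -> kWh


# 20 floors x 20 rooms x 15 min/day = 36,500 room-hours per year.
# An ASSUMED lighting load of 137 W per room reproduces the ~5,000 kWh figure.
print(excess_kwh_per_year(20, 20, 15, room_load_w=137))  # 5000.5
```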

Essentially, today’s motion sensors don’t account for the type of object (person vs. plant) or for the time the object remains in the monitored area. These sensors only trigger on motion and don’t track anything. As a result, they are blind to what is really happening in the room during periods of perceived non-motion. So, to be safe, time delays are built in that keep the lights on longer than necessary. We should also look beyond lighting to other applications where a sensor could control energy consumption more intelligently. HVAC control is a good example: used for that purpose, today’s motion sensors may heat or cool a space for a single person entering a conference room. Motion sensors also don’t account for the number of objects, another limitation of their basic binary nature.

Vision for the Near Future

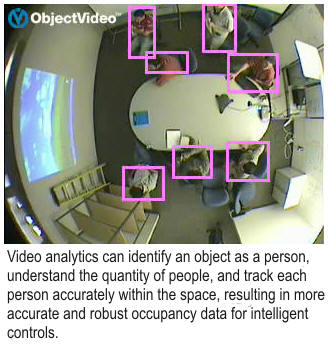

Vision-based analytics currently addresses all of these shortcomings of common motion sensors. First, video analytic technology classifies the objects being tracked, applying various filters and calibration to distinguish a person from a sporadically moving curtain. Second, the analytics actually track the detected objects. This enables more intelligent controls, such as requiring a minimum time in the area before lights are turned on. Once an object has been detected, the technology keeps tracking it even when it becomes motionless. And because it can track multiple objects simultaneously, video analytics can also count the people within an area, rather than simply registering that something moved.
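The behaviors described above can be sketched as a small controller: classification, a minimum time in the area, holding state while a tracked person is motionless, and counting. This is a minimal illustration. It assumes an upstream tracker that reports a per-object ID, a class label and a first-seen timestamp. The `Track` fields, the `"person"` label and the 10-second dwell threshold are hypothetical and are not ObjectVideo's API.

```python
from dataclasses import dataclass


@dataclass
class Track:
    track_id: int
    label: str         # classifier output, e.g. "person" or "curtain" (hypothetical labels)
    first_seen: float  # seconds at which the tracker first saw this object


class TrackingOccupancyController:
    """Lighting decision driven by tracked, classified objects rather than raw motion."""

    def __init__(self, min_dwell_s=10.0):
        self.min_dwell_s = min_dwell_s  # hypothetical minimum time in area
        self.lights_on = False

    def update(self, now, tracks):
        """Return (lights_on, people_count) for the current set of tracks."""
        people = [t for t in tracks if t.label == "person"]
        if not people:
            # Only non-human objects (or nothing) are tracked: lights off.
            self.lights_on = False
        elif not self.lights_on:
            # Switch on only once someone has stayed for the minimum dwell time.
            self.lights_on = any(now - t.first_seen >= self.min_dwell_s for t in people)
        # Once lit, stay lit while any person is still tracked, moving or not.
        return self.lights_on, len(people)


ctrl = TrackingOccupancyController(min_dwell_s=10.0)
print(ctrl.update(5.0, [Track(1, "person", 0.0)]))     # (False, 1): not here long enough
print(ctrl.update(12.0, [Track(1, "person", 0.0)]))    # (True, 1)
print(ctrl.update(13.0, [Track(2, "curtain", 13.0)]))  # (False, 0): non-human object only
```

This sketch turns the lights off the instant no person is tracked, which keeps it short. A real controller would likely add a brief hold to ride out momentary tracking losses such as occlusion.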

ObjectVideo has compared video footage from actual buildings against motion sensor operation, using its occupancy and people-counting technology. The results show a significant reduction in false detections (i.e., no triggering on non-human events) and better determination of occupancy during periods without motion. This could prevent the lights from turning off while a person sits motionless in a room (and the arm-waving that follows to turn them back on).


Even an incremental gain in the performance of a single detector could mean a widespread reduction in energy consumption across an entire building, but surely that gain comes at a price. One cost factor is the analytic software itself. It is understandably more complex, and likely more costly, than motion detection technology. However, low software cost is achievable given the potential volume from expanded use of advanced vision-based sensors in intelligent buildings, and ObjectVideo understands and is addressing those economics. The bigger factor is hardware cost. To date, vision technology has not been considered for this role because of the cost of the hardware needed to host the analytic software.

A reasonable vision sensor would be a typical low-cost IP camera. But today’s camera prices cannot compete with the much lower cost of a wall-mount motion sensor. Plus, a camera requires network bandwidth to stream video and introduces privacy issues. For the world to realize the benefits of vision-based analytics, cost, bandwidth and privacy must all be addressed.

The overall solution is called a “vision-based sensor” for a reason. ObjectVideo lives in the world of “video” analytics because its algorithms rely on video from cameras to deliver typical applications such as detecting security breaches and counting people. But smart building applications need not be limited to cameras. What if a basic vision sensor captured video frames solely for occupancy sensing? There would be no video output, and no additional processing would be required for any kind of video encoding.

Innovation in low-cost vision sensors that can host occupancy sensing analytics has already begun. Lyrtech, in Quebec City, Canada, has recently launched its first intelligent occupancy sensor (IOS). The IOS is available as a ready-to-deliver prototype or as a reference design for manufacturers looking to enter this emerging market. Its processing engine is a digital signal processor (DSP) based on DaVinci technology from Texas Instruments. DaVinci is a scalable, programmable system-on-chip (SoC) that integrates accelerators and peripherals optimized for a broad spectrum of digital video equipment.


The IOS imager is designed as an independent module, which makes it easy to replace to meet new technological challenges or to offer different features. By default, the imager is fitted with a 180° field-of-view lens, and it can easily be augmented with infrared illuminators for low-light environments. The reference design’s imager can output video through a composite video interface for development or troubleshooting. A production IOS, however, can omit the video output to address privacy requirements. In addition, the IOS’s photocell helps the sensor determine ambient lighting conditions.
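A photocell reading lets the sensor skip switching on when daylight is already sufficient, such as when someone fetches a notebook from a bright room. The sketch below is a minimal illustration. It assumes readings in lux and a hypothetical 300-lux switch-on threshold; neither the threshold nor the smoothing factor comes from the IOS design. The sketch learns only from readings taken while the lights are off, because once they are on, the photocell also measures the artificial light.

```python
class AmbientLightGate:
    """Decide whether an occupied room actually needs its lights switched on."""

    def __init__(self, threshold_lux=300.0, alpha=0.2):
        self.threshold_lux = threshold_lux  # hypothetical switch-on threshold
        self.alpha = alpha                  # smoothing factor for the moving average
        self.smoothed_lux = None            # no daylight estimate yet

    def add_reading(self, lux, lights_on):
        """Update an exponential moving average of the photocell reading."""
        if lights_on:
            # With the lights on, the photocell also measures artificial light,
            # so this sketch ignores those readings.
            return
        if self.smoothed_lux is None:
            self.smoothed_lux = lux
        else:
            self.smoothed_lux += self.alpha * (lux - self.smoothed_lux)

    def should_switch_on(self, occupied):
        if not occupied:
            return False
        if self.smoothed_lux is None:
            return True  # no estimate yet: err on the side of providing light
        return self.smoothed_lux < self.threshold_lux


gate = AmbientLightGate()
gate.add_reading(650.0, lights_on=False)     # bright, sunlit room
print(gate.should_switch_on(occupied=True))  # False: daylight is enough
```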

Flexibility in communications is paramount to the potential widespread use of the IOS. For that reason, the IOS is equipped with a 10/100BASE-T Ethernet port supporting Power over Ethernet (PoE). PoE safely delivers electrical power along with data through a standard Category 5 cable. Other I/O options include ZigBee and Wi-Fi wireless interfaces, as well as a standard RS-232 serial interface. The IOS also features a CAN bus interface. CAN is a vehicle bus standard designed to let microcontrollers and devices communicate with each other without a host computer.
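Whichever interface carries it, an occupancy report can be very compact. As an illustration only, the sketch below packs a report into 8 bytes, the maximum data field of a classic CAN frame. The field layout is a hypothetical example. It is not the IOS's actual message format or any published standard.

```python
import struct

# HYPOTHETICAL 8-byte payload (not the IOS message format or any standard):
#   byte 0    flags, bit 0 = room occupied
#   byte 1    people count, 0-255
#   bytes 2-3 ambient light in lux, unsigned 16-bit
#   bytes 4-7 sequence number, unsigned 32-bit
# ">" selects big-endian with no padding, so the total is exactly 8 bytes.
PAYLOAD_FORMAT = ">BBHI"


def pack_occupancy(occupied, people, lux, seq):
    flags = 0x01 if occupied else 0x00
    people = max(0, min(int(people), 0xFF))  # clamp to one byte
    lux = max(0, min(int(lux), 0xFFFF))      # clamp to 16 bits
    return struct.pack(PAYLOAD_FORMAT, flags, people, lux, seq & 0xFFFFFFFF)


def unpack_occupancy(payload):
    flags, people, lux, seq = struct.unpack(PAYLOAD_FORMAT, payload)
    return bool(flags & 0x01), people, lux, seq


frame_data = pack_occupancy(True, 3, 420.7, seq=17)
print(len(frame_data), unpack_occupancy(frame_data))  # 8 (True, 3, 420, 17)
```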

A substantial portion of the IOS’s interfaces are integrated into the onboard DSP, and the rest of the design uses a relatively small FPGA and mostly generic components. As a result, the IOS has a very low bill of materials for a device of its kind. That makes it a logical choice as a simple vision-based sensor over bulky, expensive intelligent or photometric cameras, and a financially viable alternative to today’s motion sensors.


Beyond Green

The case for running analytics on a low-cost vision sensor is clear, and market feedback to date has been extremely supportive. Companies including ObjectVideo and Texas Instruments have dedicated resources to developing an intelligent occupancy market. They are contributing to the collective innovation needed to make it happen by creating lower-cost processing solutions that further reduce the bill of materials (BOM) cost.

As with any new technology, more field testing and trials will be required to finalize the ROI and set the required market price. To help get there, other commercial uses may actually kick-start development and initial volume delivery of the intelligent vision sensor. Retailers and casinos have stepped up to do more with vision-based people counting. One obstacle for them is finding a cost-effective way to cover an entire store or casino floor, because multiple cameras positioned for people counting can be cost-prohibitive across the whole area. A series of low-cost ceiling-mount sensors, powered by PoE and connected over a lightweight ZigBee network, for example, makes such coverage possible. No video streaming or storage is required, and operational count and occupancy data streams continuously from throughout the store or casino. Vision sensors for basic commercial people counting may actually be the first to move the needle for this advanced approach to building automation.
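When several ceiling sensors each cover their own zone, a floor-wide count is just a sum of the latest reports. This minimal sketch assumes non-overlapping zones, since overlapping fields of view would double-count people. It also assumes a hypothetical 30-second staleness timeout for sensors that stop reporting. The sensor IDs and timestamps are illustrative.

```python
class FloorOccupancy:
    """Combine per-zone people counts from several sensors into one total."""

    def __init__(self, stale_after_s=30.0):
        self.stale_after_s = stale_after_s  # hypothetical timeout for silent sensors
        self._latest = {}                   # sensor_id -> (timestamp, count)

    def report(self, sensor_id, timestamp, count):
        """Record a count, ignoring reports older than the one already held."""
        previous = self._latest.get(sensor_id)
        if previous is None or timestamp >= previous[0]:
            self._latest[sensor_id] = (timestamp, count)

    def total(self, now):
        """Sum the latest counts from sensors that have reported recently."""
        return sum(count for ts, count in self._latest.values()
                   if now - ts <= self.stale_after_s)


floor = FloorOccupancy()
floor.report("zone-a", 0.0, 4)
floor.report("zone-b", 5.0, 2)
floor.report("zone-a", 10.0, 6)  # newer report replaces the earlier one
print(floor.total(now=20.0))     # 8
```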

Getting back to smarter buildings, the data a vision sensor generates can be used for more than energy-saving controls. Space utilization measurement often comes up in ObjectVideo’s market discussions, again highlighting the quantitative capabilities of video analytics. Other concepts, such as basic loitering detection to trigger virtual concierge support, further expand the list of possible uses.
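Space utilization can be derived directly from the counts a vision sensor already produces. This is a minimal sketch that assumes headcount samples taken at a fixed interval and a known room capacity (both hypothetical inputs). It reports the fraction of samples in which the room was in use. It also reports, for the samples when the room was in use, the average fraction of capacity occupied.

```python
def utilization(counts, capacity):
    """Summarize evenly spaced headcount samples for one space.

    Returns (occupancy_rate, seat_utilization):
      occupancy_rate   - fraction of samples with at least one person present
      seat_utilization - mean headcount while occupied, divided by capacity
    """
    if not counts or capacity <= 0:
        raise ValueError("need at least one sample and a positive capacity")
    occupied = [c for c in counts if c > 0]
    occupancy_rate = len(occupied) / len(counts)
    seat_utilization = sum(occupied) / len(occupied) / capacity if occupied else 0.0
    return occupancy_rate, seat_utilization


# An 8-seat meeting room sampled six times: in use half the time,
# and about one third full while in use.
rate, seats = utilization([0, 0, 2, 4, 0, 2], capacity=8)
print(rate, round(seats, 2))  # 0.5 0.33
```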

In addition to its superior detection performance, this new breed of intelligent sensor can serve multiple uses, which strengthens the case for it as a hyper-efficient occupancy sensor. The key takeaway is that an intelligent vision sensor costing about the same as today’s advanced PIR and ultrasonic motion sensors is on the near horizon. It is waiting for manufacturers ready to take advantage of this exciting new market.
