It helps to think of cloud-native not as a technology per se, but as a philosophy: a way of doing things. It’s a method of developing and deploying applications and sharing data across cloud architectures. And it’s an approach that will revolutionize the way our building systems communicate and function by bringing the full force of cloud computing to building automation. But to fully appreciate the power of cloud-native, it helps to understand the cloud itself. So, if you haven’t already, check out my companion piece: What’s the Cloud? A Non-Techie Explanation.

Cloud-native is a concept we get from the IT and software development folks. It refers to a specific approach to developing and deploying applications. The cloud itself is a type of computing architecture that provides the structure and resources for running those applications (e.g., storage, servers, and processing power). In a cloud-native approach, apps are designed from the ground up to run “natively” inside a cloud environment. You can think of cloud-native apps as Formula 1 cars, which are specifically built to run on Formula 1 tracks (i.e., the cloud). Could you drive an F1 car on a NASCAR track? Yes, of course, but it wouldn’t perform as well because that’s not its “native” infrastructure.

Because they are made to run in the cloud environment, cloud-native apps offer many benefits. They’re easier to scale, deploy, and integrate than traditional apps. They gain these benefits from two cloud-native principles: microservices and containerization. Let’s take a quick look at how these development principles work; then we’ll look at how they can benefit building automation.

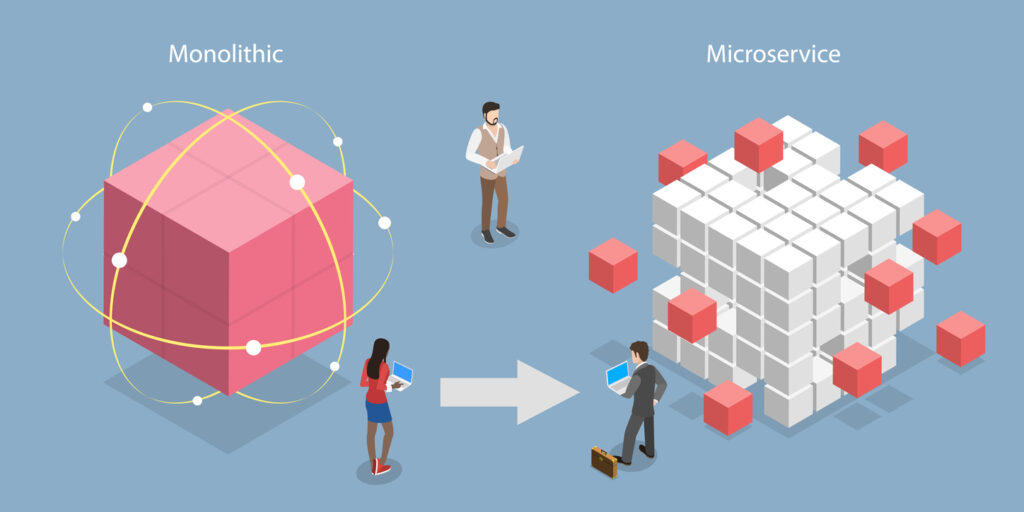

Microservices

Traditional development follows a monolithic architecture: the app is created as one single, tightly integrated unit. This keeps things nice and tidy, but it poses problems when you need to make changes. Because all the app’s components and functionalities are “tightly coupled,” a change to one part of the code can ripple through the entire project, and the whole app typically has to be rebuilt, retested, and redeployed. This makes it difficult and time-intensive to modify or scale individual parts independently.

Cloud-native systems are designed using a microservices architecture. Instead of a monolithic system, components and functions are broken down into smaller, independent microservices. Each microservice performs a specific function and can be developed, deployed, and scaled independently. This approach enhances flexibility and modularity.

For example, an e-commerce app might include a user authentication microservice and an order management microservice. In a cloud-native environment, these services can be changed or scaled independently, making deployment much easier, faster, and safer.
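To make the e-commerce example a little more concrete, here is a minimal Python sketch. It assumes both services live in one process purely for illustration; in a real deployment each would be a separate program reached over the network. The names `AuthService`, `OrderService`, and `scale` are hypothetical. The point is the boundary: the order service talks to the auth service through one narrow method, and changing how many copies of one service run leaves the other service’s plan untouched.

```python
from dataclasses import dataclass, field


@dataclass
class AuthService:
    """Handles only users and credentials (hypothetical service)."""
    # Toy storage: username -> password. Never store plain-text passwords in practice.
    users: dict[str, str] = field(default_factory=dict)

    def register(self, username: str, password: str) -> None:
        self.users[username] = password

    def authenticate(self, username: str, password: str) -> bool:
        return self.users.get(username) == password


@dataclass
class OrderService:
    """Handles only orders; depends on AuthService through one narrow call."""
    auth: AuthService
    orders: list[tuple[str, str]] = field(default_factory=list)

    def place_order(self, username: str, password: str, item: str) -> bool:
        # The order service never touches user storage directly.
        if not self.auth.authenticate(username, password):
            return False
        self.orders.append((username, item))
        return True


def scale(replicas: dict[str, int], service: str, count: int) -> dict[str, int]:
    """Return a new replica plan in which only `service` changes."""
    if count < 0:
        raise ValueError("replica count cannot be negative")
    return {**replicas, service: count}
```

For instance, `scale({"auth": 1, "orders": 1}, "orders", 3)` triples order capacity during a sale while the authentication service keeps running exactly as before. A real system would replace the direct method call with an HTTP or messaging API, which is what lets each service be deployed on its own schedule.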

Containerization

Containerization is a crucial aspect of cloud-native development and deployment. As the name suggests, it means each microservice is packaged into its own discrete unit. These “containers” hold the service’s code along with its dependencies, libraries, and configuration. To better understand how containerization works, consider the analogy of real shipping containers.

Imagine you’re a shipping company trying to transport a variety of goods. You could load individual items onto the ship. However, each item has its own unique shape and size, making it time-consuming to load, unload, and organize. You would need to carefully arrange each item, secure it separately, and make sure everything fits into the available space. This process is labor-intensive, requires precise planning, and is often inefficient.

These limitations are why standard shipping containers exist. With containers (i.e., cloud-native), you pack the goods into standardized, stackable metal boxes of uniform size. The containers provide a consistent, self-contained environment to hold the cargo, regardless of what’s inside. This means you can load and unload (i.e., deploy) multiple containers onto the ship in less time.

In the same way, containerization gives each microservice a consistent, isolated runtime environment, so it behaves the same whether it’s running on a developer’s local machine, in a test environment, or on a production cloud platform. In short, a container will fit on any ship built to carry containers.
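One way to see why a container “fits on any ship” is that its contents are fixed and identifiable. Real container images can be referenced by a SHA-256 digest of their content. The Python sketch below is a simplified, hypothetical stand-in for that idea, not the actual image format: `ImageSketch` bundles code, pinned dependencies, and configuration, and its digest depends only on those contents. The package name `http-lib` and its versions are made up for illustration.

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class ImageSketch:
    """Hypothetical, simplified stand-in for a container image."""
    name: str
    code: str                                  # the microservice's source code
    dependencies: tuple[tuple[str, str], ...]  # pinned (package, version) pairs
    config: tuple[tuple[str, str], ...]        # (setting, value) pairs baked in

    def digest(self) -> str:
        # Serialize deterministically so identical contents always hash identically.
        payload = json.dumps(
            {
                "name": self.name,
                "code": self.code,
                "dependencies": sorted(self.dependencies),
                "config": sorted(self.config),
            },
            sort_keys=True,
        ).encode("utf-8")
        return "sha256:" + hashlib.sha256(payload).hexdigest()


def deploy(image: ImageSketch, hosts: list[str]) -> dict[str, str]:
    """Record which image digest each host runs; the host plays no part in it."""
    return {host: image.digest() for host in hosts}


orders_v1 = ImageSketch(
    name="order-service",
    code="def handle(order): ...",
    dependencies=(("http-lib", "2.1.0"),),  # hypothetical package
    config=(("LOG_LEVEL", "info"),),
)
```

Deploying `orders_v1` to a laptop, a test server, and production yields the same digest on all three. Because the digest changes whenever any dependency or setting changes, a mismatch between environments shows up as a different identifier rather than as a surprise at runtime.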

Orchestration

It’s probably clear by now that cloud-native is an approach that requires some serious organization. All these microservices, containers, and computational workloads need something directing where and when things happen. Luckily, this level of “orchestration” is already available, and it’s open source!

Kubernetes is an open-source container orchestration platform. It was originally developed by Google and is now maintained by the Cloud Native Computing Foundation (CNCF). Kubernetes is one of the most popular and widely used tools in the cloud-native ecosystem. The platform’s primary purpose is to automate the deployment, scaling, and management of containerized applications. It provides a robust framework for running containerized workloads across clusters of machines, called nodes.
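Kubernetes works declaratively: you describe the desired state (for example, how many copies of each service should run), and controllers repeatedly compare it with what is actually running and act to close the gap. The Python sketch below illustrates only that reconciliation idea. `reconcile` and `apply_actions` are hypothetical helpers, not part of the Kubernetes API, and real controllers work through the Kubernetes API server rather than plain dictionaries.

```python
def reconcile(desired: dict[str, int], running: dict[str, int]) -> list[str]:
    """Compare desired vs. running copies per service and list corrective actions."""
    actions: list[str] = []
    for service in sorted(set(desired) | set(running)):
        want = desired.get(service, 0)  # services not declared should not run
        have = running.get(service, 0)
        if have < want:
            actions += [f"start {service}"] * (want - have)
        elif have > want:
            actions += [f"stop {service}"] * (have - want)
    return actions


def apply_actions(actions: list[str], running: dict[str, int]) -> dict[str, int]:
    """Simulate carrying out the actions and return the new running state."""
    state = dict(running)
    for action in actions:
        verb, service = action.split(" ", 1)
        state[service] = state.get(service, 0) + (1 if verb == "start" else -1)
    # Drop services with no running copies left.
    return {service: n for service, n in state.items() if n > 0}
```

Suppose the desired state is two `auth` copies and three `orders` copies, but one `orders` copy has crashed and a leftover `legacy` service is still running. `reconcile` returns one “stop legacy” and two “start orders” actions; after applying them, calling `reconcile` again returns an empty list, which is the steady state a controller aims for.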

Cloud-Native Benefits: Baking a Cake vs Ordering One

When cloud-native development approaches take advantage of cloud architectures, they unlock powerful benefits, some of which we’ve already seen. But let’s look at a fuller list of what a transition to a cloud-native approach implies.

We can understand the benefits of combining cloud-native approaches with a cloud service architecture through an analogy: baking a cake. In a traditional baking process, you handle each cake individually from start to finish. You gather all the ingredients, mix the batter, and bake the cakes one by one in separate pans. Then you frost and decorate each cake individually. This is equivalent to the monolithic approach to app design and deployment.

In the second approach, you simply order a cake from a professional bakery (i.e., cloud services). Instead of making the cake from scratch, you provide specific instructions (i.e., app code) about the type of cake, flavors, and decorations you want. The bakery then takes care of the entire process. In other words, you offload the practical work of production and distribution to a third party. Why? Because the bakery is faster, cheaper, and more efficient.

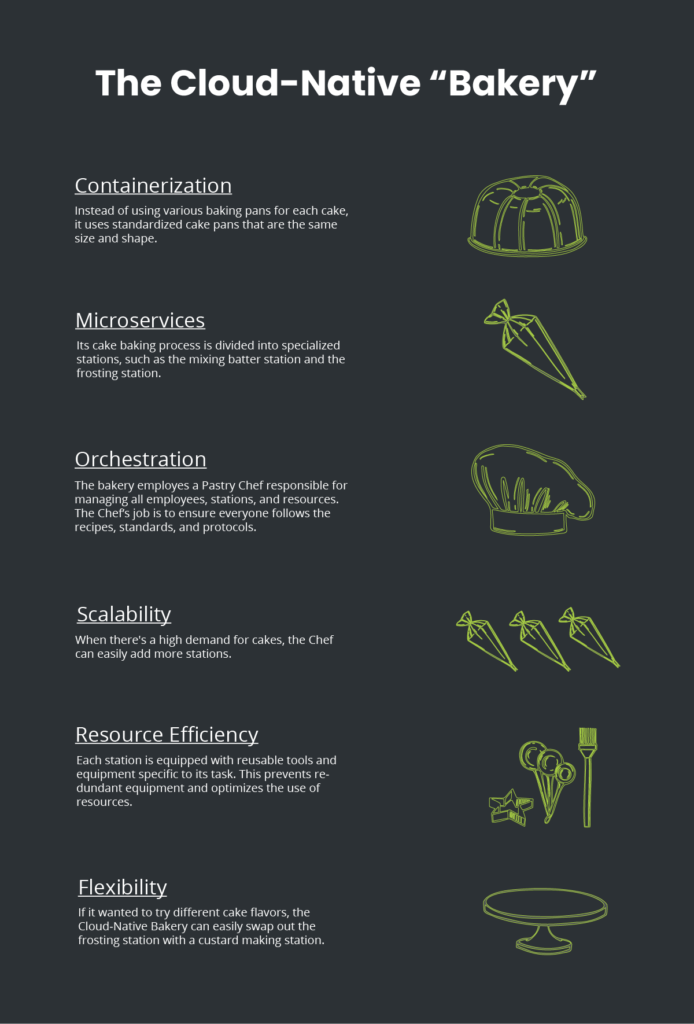

The Cloud-Native “Bakery” achieves these benefits by applying cloud-native principles:

- Containerization: Instead of using various baking pans for each cake, it uses standardized cake pans that are the same size and shape.

- Microservices: Its cake baking process is divided into specialized stations, such as the batter-mixing station and the frosting station.

- Orchestration: The bakery employs a Pastry Chef responsible for managing all employees, stations, and resources. The Chef’s job is to ensure everyone follows the recipes, standards, and protocols.

- Scalability: When there’s a high demand for cakes, the Chef can easily add more stations.

- Resource Efficiency: Each station is equipped with reusable tools and equipment specific to its task. This prevents redundant equipment and optimizes the use of resources.

- Flexibility: If it wants to try different cake flavors, the Cloud-Native Bakery can easily swap out the frosting station for a custard-making station.
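The Scalability point maps to a real Kubernetes feature. The Horizontal Pod Autoscaler computes a replica count with the documented rule `desiredReplicas = ceil[currentReplicas * (currentMetricValue / desiredMetricValue)]`. The Python function below is a minimal sketch of that formula alone; the `min_replicas` and `max_replicas` defaults are assumptions for this sketch, and the real autoscaler also applies a tolerance band and stabilization behavior that are omitted here.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,   # assumed floor for this sketch
    max_replicas: int = 10,  # assumed ceiling for this sketch
) -> int:
    """Sketch of the HPA scaling rule: grow or shrink in proportion to load."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * (current_metric / target_metric))
    # Keep the result inside the configured bounds.
    return max(min_replicas, min(max_replicas, raw))
```

For example, three replicas averaging 90% CPU against a 60% target scale to five, while four replicas idling at 20% shrink to two. In bakery terms, the Chef adds or removes stations in proportion to how far demand is from what the current stations handle comfortably.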

By applying these same cloud-native principles to building automation, the industry could create systems that are more efficient, scalable, and flexible. However, the industry is still slaving away at batter mixing when we should be picking up the phone to order.

Building Automation

So, what does all this mean for building automation? Leveraging cloud-native approaches extends the capacity of building systems in almost every way, from energy efficiency to fault detection and diagnostics (FDD). Cloud architectures and orchestration mean computational work can happen anywhere: in the public cloud, in a private cloud, or on-premises. As we’ve seen, that’s helpful with data storage, but it also supports building functionality. For example, some critical sensors and equipment need lower latency. Sending data from a sensor to the cloud and back takes longer than doing the computational work locally, so those workloads can run on-premises while less time-sensitive work runs in the cloud.
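The latency trade-off can be expressed as a simple placement rule: run each workload at the most shared, centralized site that still meets its response-time budget. The Python sketch below assumes that rule. The site names, `round_trip_ms` figures, and workload budgets are illustrative placeholders, not measurements from any real building or cloud provider.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Site:
    name: str
    round_trip_ms: float  # placeholder sensor -> site -> equipment latency, not a measurement


@dataclass(frozen=True)
class Workload:
    name: str
    latency_budget_ms: float  # slowest response the task can tolerate


# Ordered by preference: use shared cloud capacity unless latency rules it out.
SITES: tuple[Site, ...] = (
    Site("public-cloud", 120.0),
    Site("private-cloud", 40.0),
    Site("on-premises", 5.0),
)


def place(workload: Workload, sites: tuple[Site, ...] = SITES) -> str:
    """Return the first (most preferred) site whose latency fits the budget."""
    for site in sites:
        if site.round_trip_ms <= workload.latency_budget_ms:
            return site.name
    raise ValueError(
        f"no site can meet {workload.latency_budget_ms} ms for {workload.name}"
    )
```

With these placeholder numbers, a fault-detection job that can wait a minute lands in the public cloud, while a tight control loop with a 10 ms budget stays on-premises. Real placement decisions weigh more than latency (connectivity loss, security, cost), but the ordering captures the idea that work can live wherever orchestration can reach.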

Cloud-native approaches would help ensure our automation efforts leverage the full potential of cloud services, making our properties more flexible, easier to run, and more efficient.

To continue your understanding of cloud-native and its impact on building automation, read Anto Budiardjo’s insightful piece The Building’s Journey to Cloud-Native and check out this episode of MondayLive!