
July 2021

AutomatedBuildings.com


Taming an unborn Beast


Contributing Editor


Artificial Intelligence (AI) is pervasive and is permeating all jobs and all walks of life in every industry. It comes with huge power to automate mundane jobs and create new conveniences and opportunities, but it also has limitations that affect lives when its predictions are incorrect. And the AI that we work with is constantly learning and adapting, recreating itself as it interacts with people and new situations.

All AI is a Prediction

AI is everywhere, and it works by prediction. AI is trained with the data we feed it. Then, when we engage it to work, it makes a prediction. Every single time.

Ask AI for a financial forecast, whether we should expect an upcoming storm, or how much power will be generated by wind for sustainability. It will readily give us an answer. All of these answers are predictions.

Critical answers from an AI, such as whether someone has Covid or Alzheimer's, are predictions.

What appear to be trivial things, such as autocorrect on our texts or search engine suggestions, are all predictions.

Type 'On Thursday I will' into Google search and it will suggest that I will get paid. Type 'On Thursday you would' and it will suggest that you will get pregnant. That is a prediction too.

Why Should AI be Tamed?

AI is unleashing great power, impacting lives and creating a new future for the world that affects everyone and everything.

AI is being used to create synthetic media, or deepfakes. In the wrong hands, this distorts the idea of what is real news and creates fake news that propagates misinformation campaigns. AI can create artificial human images and alter real videos so that we cannot tell what is real and what is doctored. The challenge is that AI has both positive and negative applications. Listen to Manon De Dunne's talk to understand the power and challenges of synthetic media created with artificial intelligence.

Taming AI and making it work is like trying to work with a future that has not yet been created. It is about predicting the future before it is built.

To understand how we can manage AI, we need to focus on three concepts that are core to how AI is trained, runs predictions and learns continuously.

The Confusion Matrix of AI

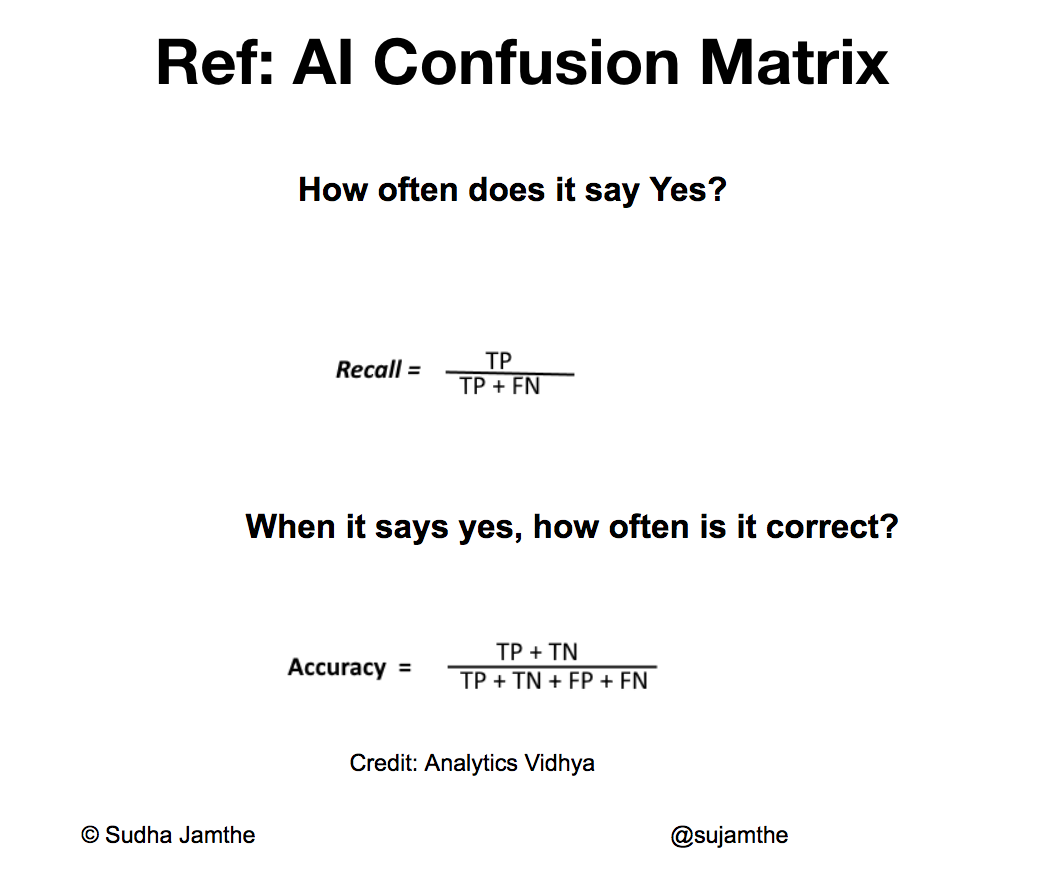

Every time an AI engages with us, it makes a prediction, Yes or No, while the actual truth may also be Yes or No. This leads to four possible outcomes for each prediction: a 'True Positive', 'True Negative', 'False Positive' or 'False Negative'.

Laid out as a two-by-two table, these four outcomes form what is known as the Confusion Matrix of AI.
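To make the four outcomes concrete, here is a minimal Python sketch that tallies them from paired lists of the AI's Yes/No predictions and the true answers. The function name `confusion_matrix_counts` and the sample data are hypothetical, for illustration only; in practice, data scientists usually rely on a library such as scikit-learn for this.

```python
def confusion_matrix_counts(predicted, actual):
    """Tally the four outcomes for Yes/No (True/False) predictions.

    Hypothetical helper for illustration; not from any specific library.
    """
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")
    counts = {"TP": 0, "TN": 0, "FP": 0, "FN": 0}
    for p, a in zip(predicted, actual):
        if p and a:
            counts["TP"] += 1  # AI said Yes, truth is Yes
        elif not p and not a:
            counts["TN"] += 1  # AI said No, truth is No
        elif p and not a:
            counts["FP"] += 1  # AI said Yes, truth is No
        else:
            counts["FN"] += 1  # AI said No, truth is Yes
    return counts


# Made-up example: six predictions compared against the truth.
predicted = [True, True, False, False, True, False]
actual = [True, False, False, True, True, False]
print(confusion_matrix_counts(predicted, actual))
```

Each prediction lands in exactly one of the four cells, so the counts always add up to the number of predictions made.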

The data scientist who builds an AI tracks two metrics derived from the confusion matrix to get visibility into how accurately the AI makes predictions: 'Recall' and 'Precision'.

Recall is how often the AI says Yes when the true answer is Yes, that is, the share of actual Yes cases the AI catches.

Precision is how often the AI is right when it says Yes.

So low precision means that we cannot trust the AI's Yes predictions, and low recall means that the AI misses many of the cases it should catch.
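The sketch below computes both metrics from confusion-matrix counts using their standard definitions: recall is TP / (TP + FN) and precision is TP / (TP + FP). It also computes overall accuracy, (TP + TN) / total, to show that accuracy is a different measure from precision. The counts are made up, and returning 0.0 when a denominator is zero is an assumed convention, not a universal rule.

```python
def recall(tp, fn):
    """Share of actual Yes cases that the AI said Yes to: TP / (TP + FN)."""
    return tp / (tp + fn) if (tp + fn) else 0.0  # assumed convention for 0/0


def precision(tp, fp):
    """Share of the AI's Yes answers that were correct: TP / (TP + FP)."""
    return tp / (tp + fp) if (tp + fp) else 0.0  # assumed convention for 0/0


def accuracy(tp, tn, fp, fn):
    """Share of all predictions, Yes or No, that were correct."""
    total = tp + tn + fp + fn
    return (tp + tn) / total if total else 0.0


# Made-up counts: most cases are true No cases.
tp, tn, fp, fn = 80, 900, 20, 40
print(f"recall={recall(tp, fn):.2f}")
print(f"precision={precision(tp, fp):.2f}")
print(f"accuracy={accuracy(tp, tn, fp, fn):.2f}")
```

With these made-up counts, accuracy comes out around 0.94 while recall is only about 0.67: because most cases are No, an AI can look accurate overall while still missing a third of the Yes cases. This is why recall and precision are tracked separately.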

The Ethical Principles of AI

Trustworthy:

When AI makes a decision, the recall and precision metrics tell us how often we can rely on its prediction. These metrics inform us about how much to trust the AI.

To paraphrase privacy expert Jessica Groopman: "We are taking AI's advice as a reference to make decisions, but it is more of an inference that the AI makes with a certain level of accuracy, with room for inaccurate predictions."

Transparency:

Whenever an AI engages with a patient, a customer or a critical system making life-or-death decisions, often nobody knows how it made that decision or what factors it considered in making that prediction.

Fairness:

When the AI is built with a biased dataset that favors one group of people over others, which unfortunately is typically the case today, the AI gives more right answers to one group of people and more wrong answers to other groups.

For example, Amazon built an AI recruiting tool that showed bias against women, favoring men over women in the hiring process. As the Gender Shades project from Joy Buolamwini and Timnit Gebru showed, commercial facial analysis systems are less accurate on darker-skinned faces than on lighter-skinned faces. Similarly, an AI that predicts diseases makes correct predictions for the population it was trained with and inaccurate predictions for populations that were not well represented in its training data.
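One way to see this kind of unfairness in numbers is to measure accuracy separately for each group instead of only overall. The sketch below uses made-up records and hypothetical group labels 'A' and 'B'; it is not based on the Amazon or Gender Shades data, and `accuracy_by_group` is a hypothetical helper.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """records: iterable of (group, predicted, actual) tuples.

    Returns {group: share of predictions that matched the truth}.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        if predicted == actual:
            correct[group] += 1
    return {g: correct[g] / total[g] for g in total}


# Made-up records: right 9 of 10 times for group A, 6 of 10 for group B.
records = (
    [("A", True, True)] * 9
    + [("A", True, False)]
    + [("B", True, True)] * 6
    + [("B", False, True)] * 4
)
rates = accuracy_by_group(records)
gap = rates["A"] - rates["B"]
print(rates)
print(f"accuracy gap between groups: {gap:.2f}")
```

Overall, this made-up model is right 15 times out of 20, which hides the fact that group B receives far more wrong answers than group A. Breaking metrics down by group is a common first step in spotting such bias.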

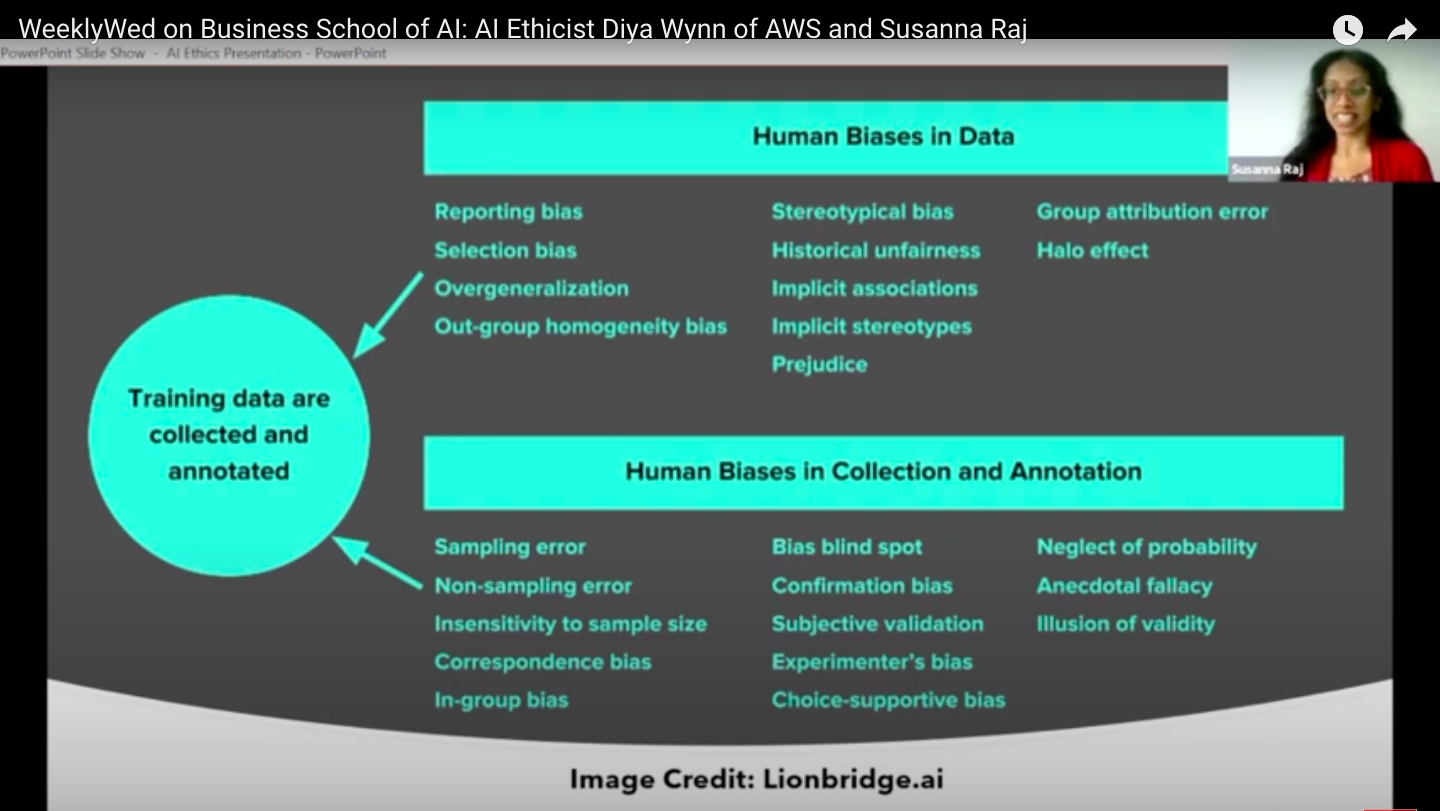

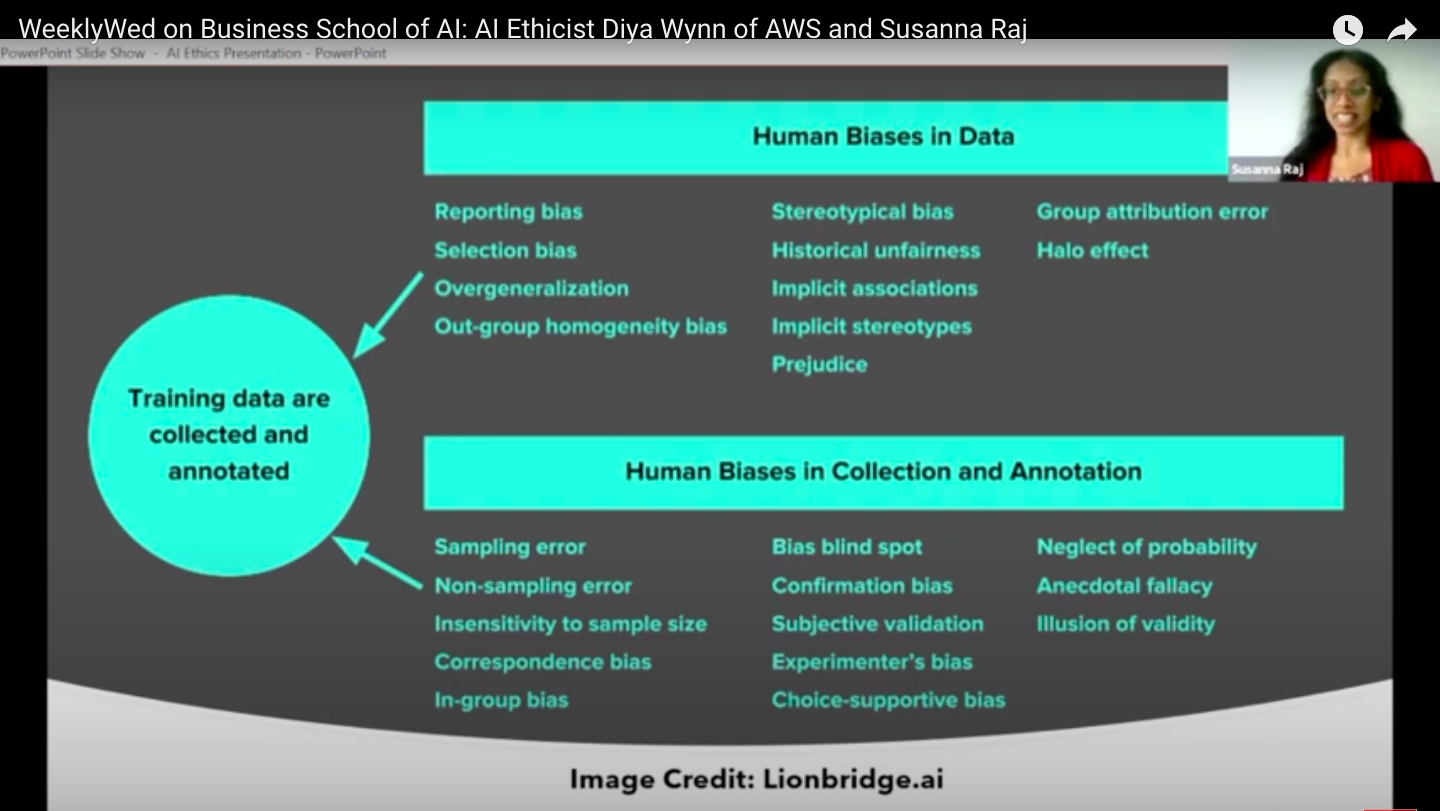

Several kinds of bias creep into AI at every stage of the AI lifecycle, from conception, data collection and labeling through model selection, all the way to engagement with users. See the image from AI ethicist Susanna Raj that shows the many possible types of bias that creep into AI.

Image: Susanna Raj session about data bias at Career Pivot to AI Ethics class at Business School of AI

The Learning AI

AI is constantly learning. It retrains continuously, recreating itself with new data and with new customers engaging with it. Each time an AI interacts with a user, it gets new training data from the interaction, and this data is used to retrain the AI into a better version of itself.

This means that the AI you will interact with tomorrow is not the same as the AI you are interacting with today. This is the power of AI to adapt. Therein lies the challenge of managing AI: taming a beast that is not yet born.
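The idea that tomorrow's AI differs from today's can be shown with a deliberately tiny model. In this hypothetical sketch, the model says Yes when a score reaches a learned threshold, set halfway between the average score of Yes examples and the average score of No examples. Each labeled interaction is added to the training data and the threshold is recomputed. Real systems retrain far more complex models, often in batches rather than after every interaction; the class and method names here are illustrative assumptions.

```python
class RetrainingModel:
    """Hypothetical toy model that retrains after every labeled interaction.

    Predicts Yes when score >= threshold, where the threshold is the midpoint
    between the mean score of Yes examples and the mean score of No examples.
    """

    def __init__(self, examples):
        self.examples = list(examples)  # list of (score, label) pairs
        self.retrain()

    def retrain(self):
        yes = [s for s, label in self.examples if label]
        no = [s for s, label in self.examples if not label]
        if not yes or not no:
            raise ValueError("need at least one Yes and one No example")
        self.threshold = (sum(yes) / len(yes) + sum(no) / len(no)) / 2

    def predict(self, score):
        return score >= self.threshold

    def learn_from_interaction(self, score, true_label):
        """Add feedback from one interaction to the training data, then retrain."""
        self.examples.append((score, true_label))
        self.retrain()


model = RetrainingModel([(2.0, False), (3.0, False), (7.0, True), (8.0, True)])
print("today:", model.threshold, model.predict(5.2))

# New interactions arrive: two mid-range scores turn out to be No cases.
for score in (5.5, 6.0):
    model.learn_from_interaction(score, False)
print("tomorrow:", model.threshold, model.predict(5.2))
```

The same input, a score of 5.2, gets a Yes today and a No tomorrow, only because the training data changed. Whatever bias the new interaction data carries flows straight into the retrained model.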

How can we tame AI to work better for the future?

We need to tame AI to make it work for everyone without bias. We need to keep AI from making incorrect interpretations that affect lives and take away agency from any group of people.

As much as AI is recreating itself by retraining, it is also creating a new world every time you interact with it. Because of the bias in its training data, its prediction about whether to open a building's door can depend on who is asking to be let in. This makes the AI's future results unpredictable.

The good news is that we can make AI help create a better world by focusing on the training data. This training data determines the precision and recall captured in the confusion matrix. This training data is what contributes to the bias that makes the AI act unfairly towards some groups of people. And this training data is what allows the AI to retrain into the AI that you will interact with in the future.

So the best way forward is to focus on the training data, ensure that the AI ethical principles of trust, transparency and fairness are incorporated into that data, and build active bias mitigation into every stage of the AI development lifecycle.

Listen to the talk by AI ethicist Diya Wynn of AWS about how to ensure that your customers build AI ethically in all industries.

The good news is that AI ethics jobs are emerging across technology roles in every industry.

So you can take part in this journey to tame AI and make it work for all of us, creating the better future that we each dream about.

References:

Joy Buolamwini and Timnit Gebru, "Gender Shades: Intersectional Accuracy Disparities in Commercial Gender Classification," Proceedings of Machine Learning Research 81:1–15, 2018 Conference on Fairness, Accountability, and Transparency.

Jeffrey Dastin, "Amazon scraps secret AI recruiting tool that showed bias against women," October 2018.

Alex Najibi, "Racial Discrimination in Face Recognition Technology," October 2020.

Sudha Jamthe is a technology futurist who teaches professional learners to create the connected, autonomous and ethical future with Artificial Intelligence at Stanford Continuing Studies and the Business School of AI.
